- 15 Nov 2024

- 5 Minutes to read

- Print

- DarkLight

- PDF

Advanced Settings

- Updated on 15 Nov 2024

- 5 Minutes to read

- Print

- DarkLight

- PDF

Panoply provides a set of advanced settings for you to customize the collection from your data sources.

Click on Advanced Settings to configure the available advanced settings. This list is not all of the advanced settings that may be available. See the individual Data Source documents for detailed descriptions of the advanced settings that are applicable.

Schema

The schema field allows you to select the name of the target schema that Panoply will use to write the tables. If your data warehouse is built on Amazon Redshift, the default schema is public. If your data warehouse is built on Google BigQuery, the default schema is panoply. Once you begin collection, you cannot change the schema. On Redshift, if you want to use a different schema, you will need to add the data source again. For more, see Database Schemas. This setting applies to most data sources.

Destination

The destination defines the table(s) where the incoming data is stored. All alphabetic characters are converted to lowercase.

Destination Prefix

The destination prefix is used to define the table(s) where the incoming data is stored. Panoply defines a default destination prefix, however, users can change that default. All alphabetic characters are converted to lowercase.

Primary Key

The primary key is a field or combination of fields that Panoply uses for deduplication when collecting data. If the incoming data has an id field Panoply will recognize it and make it the primary key. The way Panoply stores the primary key differs based on whether your data warehouse is built in Redshift or BigQuery. To learn more about primary keys, see Primary Keys

Incremental Key/Load

The Incremental Key is used to limit the information that is pulled to what was updated since the last collection and then only update the rows that have been changed. For more, see Incremental Loads. This applies to several data sources.

Exclude

Users can add a list of attributes to exclude from the collection process, if there are specific types of data (such as irrelevant or sensitive data) that you want to exclude from your Panoply data warehouse. Excluding attributes that are not necessary to the later data analysis can also speed up other processes in the data analysis pipeline.

To exclude a nested attribute, use dot notation, such as name.firstname.

You can also exclude nested data, but it should be noted that this will only exclude nested data objects, not nested arrays of objects.

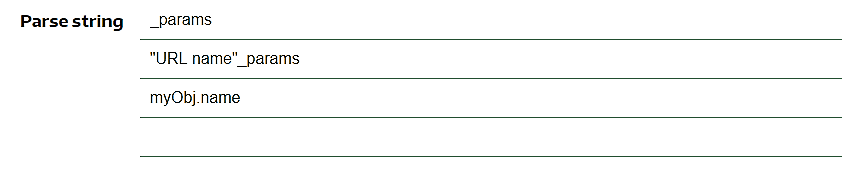

Parse string

By default, Panoply converts first-level JSON objects into table columns and stores nested JSON as strings. However, you can choose to treat JSON text attributes as JSON objects by entering JSON text attributes to be parsed in the Parse string field. This applies only to data warehouses built on Redshift.

For example, assume your source data included this JSON object that would be added to the users table: {"phone":"(987) 555-4321", "address":"{"street":"123 Main Street", "city":"Lincoln", "state":"Iowa", "country":"USA"}"}. If you do not enter anything into Parse string, Panoply will create a phone column and an address column, and will store the nested JSON blob as a string in the address column. If you wanted to parse the address attribute, you would type address into the Parse string field. This would create a users_address sub-table with street, city, state, and country columns.

As you enter JSON text attributes in the Parse string field, another line to type additional text attributes appears automatically. Each JSON attribute that you want to parse should be entered into its own row. To select a nested attribute, use dot notation, such as clients.billing.

Truncate table

Truncate deletes all the current data stored in the destination tables, but not the tables themselves. Afterwards Panoply will recollect all the available data for this data source.

Schema Lock

When selected, any new column, change to a data type or new table will not be addressed by the ingestion process. Any incompatible data will be stored as null and the data source will not fail when encountering any incompatibility. This feature is only available with the upsert load strategy and for data sources with default and user defined primary keys or datasets with id field in them.

This feature can be enabled only after at least one successful collection of the data source

Load Strategy

Users can change the load strategy of the data sources and choose one of the following options:

- Upsert (deafult) - Every record that already exists in the table will be updated while new records will be inserted. The identification of an existing record is done based on its default or configured primary key.

- Append - All the records will always be inserted into the table. Any default or configured primary keys will be saved in the table but will not be used during the ingestion process.

When selecting the append load strategy, unwanted duplicates might occur when data collections fail or when reginesting the same data set.

Date Format

Users can define if the incoming date fields are set as month-first or day-first. For example, the date 2024-04-01 will be identified as April 1st for month-first and January 4th for day-first. This configuration is critical for Panoply to identify a field as a date field and the date in the correct format. By default, Panoply will treat all dates as month-first dates.

The user can change the default behavior for all date fields and/or individual field paths for more specific configuration.

The configuration is using dot notation and arrays can be identified with [*].

Nested Data

Users can define if they want to ingest nested objects as one of three options:

- One-to-many tables (default)

- Flatten them to top-most parent table

- Ingest as JSON data type

- Pivot data in subtable

Read more about the different configurations here.

The user can change the default behavior for all nested objects and/or individual field paths for more specific configuration.

The configuration is using dot notation and arrays can be idetnified with [*].

Arrays cannot be flattened or ingested as JSON and they will always be ingested as one-to-many tables. Nested objects within arrays can be flattened up to the array one-to-many table

.png)